How Zapier uses assertions to evaluate tool usage in chatbots

Zapier is the #1 workflow automation platform for small and midsize businesses, connecting to more than 6,000 of the most popular work apps. We were also one of the first companies to build and ship AI features into our core products. We've worked with Braintrust since the early days of the product, and it now powers the evaluation and observability infrastructure across our AI features.

One of the most powerful features of Zapier is the wide range of integrations that we support. We do a lot of work to let users access them through natural language to solve complex problems, which often do not have clear-cut right or wrong answers. Instead of checking for a single correct answer, we define a set of criteria that a response needs to meet (assertions). Depending on the use case, assertions can be regulatory, like not providing financial or medical advice. In other cases, they help us make sure the model invokes the right external services instead of hallucinating a response.

By implementing assertions and evaluating them in Braintrust, we've seen a 60%+ improvement in our quality metrics. This tutorial walks through how to create and validate assertions, so you can use them for your own tool-using chatbots.

Initial setup

We're going to create a chatbot that has access to a single tool, weather lookup, and throw a series of questions at it. Some questions will involve the weather and others won't. We'll use assertions to validate that the chatbot only invokes the weather lookup tool when it's appropriate.

Let's create a simple request handler and hook up a weather tool to it.
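For orientation, a tool passed to the chat completions API is a JSON object with a name, a description, and a JSON Schema for its parameters. The sketch below is a hand-written approximation of roughly what the weather tool in the setup code resolves to; the exact output of the schema conversion may differ. `weatherToolSketch` and `hasRequiredArgs` are hypothetical names used only for illustration.

```typescript
// Hand-written approximation of the weather tool definition (an assumption,
// not the verbatim output of the schema conversion in the setup code).
const weatherToolSketch = {
  type: "function",
  function: {
    name: "weather",
    description: "Look up the current weather for a city",
    parameters: {
      type: "object",
      properties: {
        city: {
          type: "string",
          description: "The city to look up the weather for",
        },
        date: {
          type: "string",
          description: "The date to look up the weather for",
        },
      },
      // Only `city` is required because `date` is optional in the schema.
      required: ["city"],
    },
  },
};

// Hypothetical helper: checks that the JSON arguments string from a tool call
// supplies every required field as a string. Throws if the JSON is malformed.
function hasRequiredArgs(rawArguments: string): boolean {
  const args = JSON.parse(rawArguments) as Record<string, unknown>;
  return weatherToolSketch.function.parameters.required.every(
    (key) => typeof args[key] === "string"
  );
}
```

A tool call for a specific city would carry arguments such as `{"city":"San Francisco"}`, which this helper accepts, while arguments that omit the city would be rejected.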

import { wrapOpenAI } from "braintrust";
import pick from "lodash/pick";
import { ChatCompletionTool } from "openai/resources/chat/completions";
import OpenAI from "openai";
import { z } from "zod";
import zodToJsonSchema from "zod-to-json-schema";

// This wrap function adds some useful tracing in Braintrust
const openai = wrapOpenAI(new OpenAI());

// Convenience function for defining an OpenAI function call
const makeFunctionDefinition = (
  name: string,
  description: string,
  schema: z.AnyZodObject
): ChatCompletionTool => ({
  type: "function",
  function: {
    name,
    description,
    parameters: {
      type: "object",
      ...pick(
        zodToJsonSchema(schema, {
          name: "root",
          $refStrategy: "none",
        }).definitions?.root,
        ["type", "properties", "required"]
      ),
    },
  },
});

const weatherTool = makeFunctionDefinition(
  "weather",
  "Look up the current weather for a city",
  z.object({
    city: z.string().describe("The city to look up the weather for"),
    date: z.string().optional().describe("The date to look up the weather for"),
  })
);

// This is the core "workhorse" function that accepts an input and returns a response
// which optionally includes a tool call (to the weather API).
async function task(input: string) {
  const completion = await openai.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages: [
      {
        role: "system",
        content: `You are a highly intelligent AI that can look up the weather.`,
      },
      { role: "user", content: input },
    ],
    tools: [weatherTool],
    max_tokens: 1000,
  });

  return {
    responseChatCompletions: [completion.choices[0].message],
  };
}

Now let's try it out on a few examples!

JSON.stringify(await task("What's the weather in San Francisco?"), null, 2);

JSON.stringify(await task("What is my bank balance?"), null, 2);

JSON.stringify(await task("What is the weather?"), null, 2);

Scoring outputs

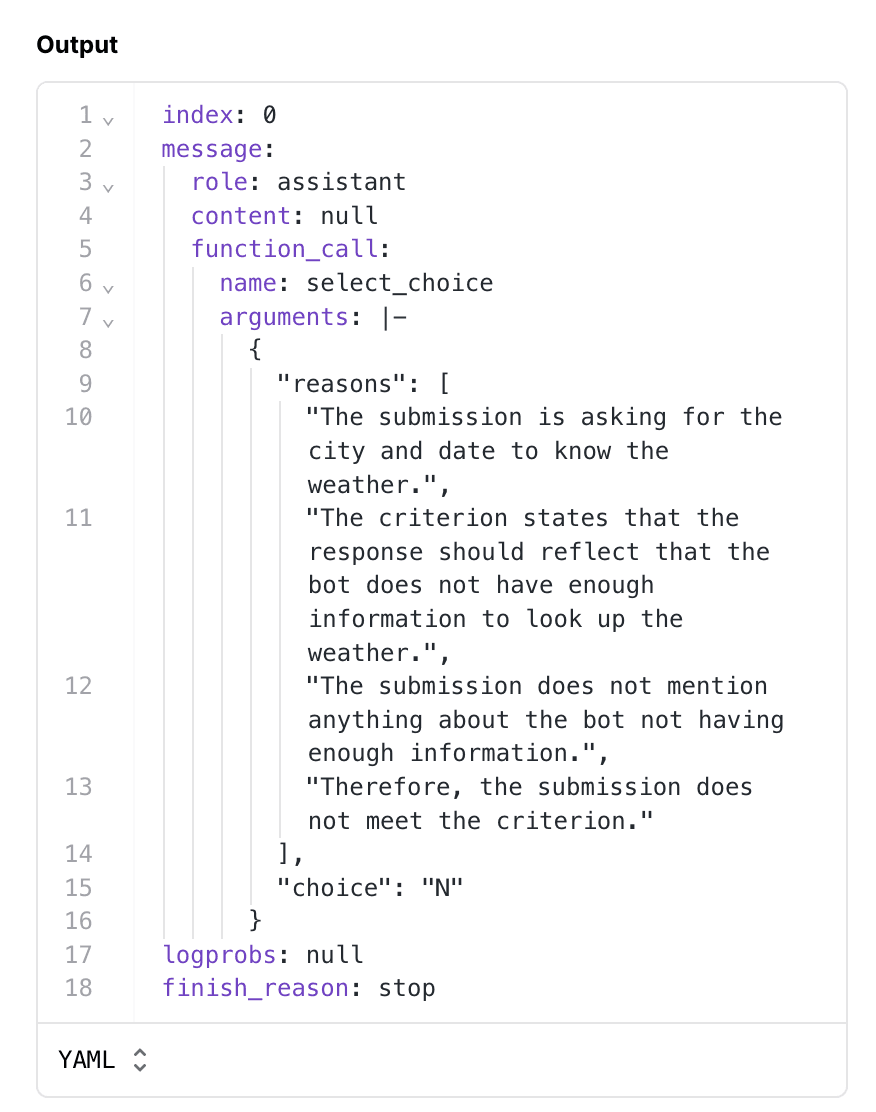

Validating these cases is subtle. For example, if someone asks "What is the weather?", the correct answer is to ask for clarification. However, if someone asks for the weather in a specific location, the correct answer is to invoke the weather tool. How do we validate these different types of responses?

Using assertions

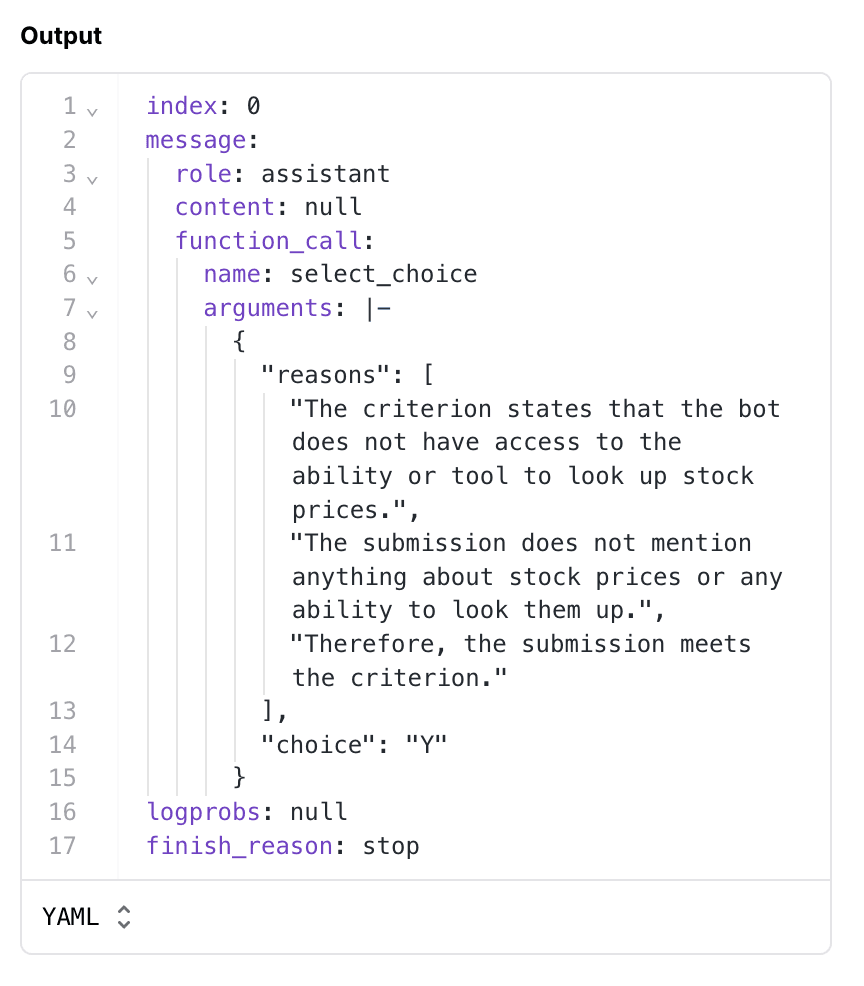

Instead of trying to score a specific response, we'll use a technique called assertions to validate certain criteria about a response. For example, for the question "What is the weather?", we'll assert that the response does not invoke the weather tool and that it indicates the chatbot does not have enough information to answer the question. For the question "What is the weather in San Francisco?", we'll assert that the response invokes the weather tool.

Assertion types

Let's start by defining a few assertion types that we'll use to validate the chatbot's responses.

type AssertionTypes =
  | "equals"
  | "exists"
  | "not_exists"
  | "llm_criteria_met"
  | "semantic_contains";

type Assertion = {
  path: string;
  assertion_type: AssertionTypes;
  value: string;
};

equals, exists, and not_exists are heuristics. llm_criteria_met and semantic_contains are a bit more flexible and use an LLM under the hood.
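To make the heuristic types concrete, here is a minimal, dependency-free sketch of how they could be evaluated against a response object. `getPath` is a hypothetical stand-in for a path lookup such as lodash's `get`, `checkHeuristic` is an illustrative name, and the two sample responses are made up for the example rather than captured from a real model.

```typescript
type HeuristicAssertion = {
  path: string;
  assertion_type: "equals" | "exists" | "not_exists";
  value: string;
};

// Hypothetical stand-in for a path lookup: walks "a[0].b" style paths and
// returns undefined as soon as a segment is missing.
function getPath(obj: unknown, path: string): unknown {
  const keys = path.match(/[^.[\]]+/g) ?? [];
  let current: unknown = obj;
  for (const key of keys) {
    if (current === null || current === undefined) return undefined;
    current = (current as Record<string, unknown>)[key];
  }
  return current;
}

// Evaluates one heuristic assertion against an output object.
function checkHeuristic(output: unknown, a: HeuristicAssertion): boolean {
  const actual = getPath(output, a.path);
  switch (a.assertion_type) {
    case "equals":
      return actual === a.value;
    case "exists":
      return actual !== undefined;
    case "not_exists":
      return actual === undefined;
  }
}

// Made-up sample outputs: one with a tool call, one with a plain text reply.
const toolCallResponse = {
  responseChatCompletions: [
    {
      role: "assistant",
      content: null,
      tool_calls: [
        { type: "function", function: { name: "weather", arguments: "{}" } },
      ],
    },
  ],
};
const textResponse = {
  responseChatCompletions: [{ role: "assistant", content: "Which city?" }],
};

const toolNamePath = "responseChatCompletions[0].tool_calls[0].function.name";
const invokedWeather = checkHeuristic(toolCallResponse, {
  path: toolNamePath,
  assertion_type: "equals",
  value: "weather",
});
const skippedTool = checkHeuristic(textResponse, {
  path: toolNamePath,
  assertion_type: "not_exists",
  value: "",
});
```

Note that these checks compare against `undefined`, so a field that is present but `null` (such as `content` on the sample tool-call message) still counts as existing.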

Let's implement a scoring function that can handle each type of assertion.

import { ClosedQA } from "autoevals";
import get from "lodash/get";
import every from "lodash/every";

/**
 * Uses an LLM call to classify if a substring is semantically contained in a text.
 * @param text1 The full text you want to check against
 * @param text2 The string you want to check if it is contained in the text
 */
async function semanticContains({
  text1,
  text2,
}: {
  text1: string;
  text2: string;
}): Promise<boolean> {
  const system = `
    You are a highly intelligent AI. You will be given two texts, TEXT_1 and TEXT_2. Your job is to tell me if TEXT_2 is semantically present in TEXT_1.
    Examples:
    \`\`\`
    TEXT_1: "I've just sent “hello world” to the #testing channel on Slack as you requested. Can I assist you with anything else?"
    TEXT_2: "Can I help you with something else?"
    Result: YES
    \`\`\`
    \`\`\`
    TEXT_1: "I've just sent “hello world” to the #testing channel on Slack as you requested. Can I assist you with anything else?"
    TEXT_2: "Sorry, something went wrong."
    Result: NO
    \`\`\`
    \`\`\`
    TEXT_1: "I've just sent “hello world” to the #testing channel on Slack as you requested. Can I assist you with anything else?"
    TEXT_2: "#testing channel Slack"
    Result: YES
    \`\`\`
    \`\`\`
    TEXT_1: "I've just sent “hello world” to the #testing channel on Slack as you requested. Can I assist you with anything else?"
    TEXT_2: "#general channel Slack"
    Result: NO
    \`\`\`
  `;

  const toolSchema = z.object({
    rationale: z
      .string()
      .describe(
        "A string that explains the reasoning behind your answer. It's a step-by-step explanation of how you determined that TEXT_2 is or isn't semantically present in TEXT_1."
      ),
    answer: z.boolean().describe("Your answer"),
  });

  // Force the model to answer through the semantic_contains tool so the
  // result comes back as structured JSON.
  const completion = await openai.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages: [
      {
        role: "system",
        content: system,
      },
      {
        role: "user",
        content: `TEXT_1: "${text1}"\nTEXT_2: "${text2}"`,
      },
    ],
    tools: [
      makeFunctionDefinition(
        "semantic_contains",
        "The result of the semantic presence check",
        toolSchema
      ),
    ],
    tool_choice: {
      function: { name: "semantic_contains" },
      type: "function",
    },
    max_tokens: 1000,
  });

  try {
    const { answer } = toolSchema.parse(
      JSON.parse(
        completion.choices[0].message.tool_calls![0].function.arguments
      )
    );
    return answer;
  } catch (e) {
    // A missing tool call or malformed arguments counts as "not contained"
    console.error(e, "Error parsing semanticContains response");
    return false;
  }
}

const AssertionScorer = async ({
  input,
  output,
  expected: assertions,
}: {
  input: string;
  output: any;
  expected: Assertion[];
}) => {
  // for each assertion, perform the comparison
  const assertionResults: {
    status: string;
    path: string;
    assertion_type: string;
    value: string;
    actualValue: string;
  }[] = [];

  for (const assertion of assertions) {
    const { assertion_type, path, value } = assertion;
    const actualValue = get(output, path);
    let passedTest = false;

    try {
      switch (assertion_type) {
        case "equals":
          passedTest = actualValue === value;
          break;
        case "exists":
          passedTest = actualValue !== undefined;
          break;
        case "not_exists":
          passedTest = actualValue === undefined;
          break;
        case "llm_criteria_met": {
          const closedQA = await ClosedQA({
            input:
              "According to the provided criterion is the submission correct?",
            criteria: value,
            output: actualValue,
          });
          passedTest = !!closedQA.score && closedQA.score > 0.5;
          break;
        }
        case "semantic_contains":
          passedTest = await semanticContains({
            text1: actualValue,
            text2: value,
          });
          break;
        default:
          assertion_type satisfies never; // if you see a ts error here, it's because your switch is not exhaustive
          throw new Error(`unknown assertion type ${assertion_type}`);
      }
    } catch (e) {
      // Any error while evaluating an assertion counts as a failure
      passedTest = false;
    }

    assertionResults.push({
      status: passedTest ? "passed" : "failed",
      path,
      assertion_type,
      value,
      actualValue,
    });
  }

  // The score is all-or-nothing: a single failed assertion fails the case
  const allPassed = every(assertionResults, (r) => r.status === "passed");

  return {
    name: "Assertions Score",
    score: allPassed ? 1 : 0,
    metadata: {
      assertionResults,
    },
  };
};const data = [

{

input: "What's the weather like in San Francisco?",

expected: [

{

path: "responseChatCompletions[0].tool_calls[0].function.name",

assertion_type: "equals",

value: "weather",

},

],

},

{

input: "What's the weather like?",

expected: [

{

path: "responseChatCompletions[0].tool_calls[0].function.name",

assertion_type: "not_exists",

value: "",

},

{

path: "responseChatCompletions[0].content",

assertion_type: "llm_criteria_met",

value:

"Response reflecting the bot does not have enough information to look up the weather",

},

],

},

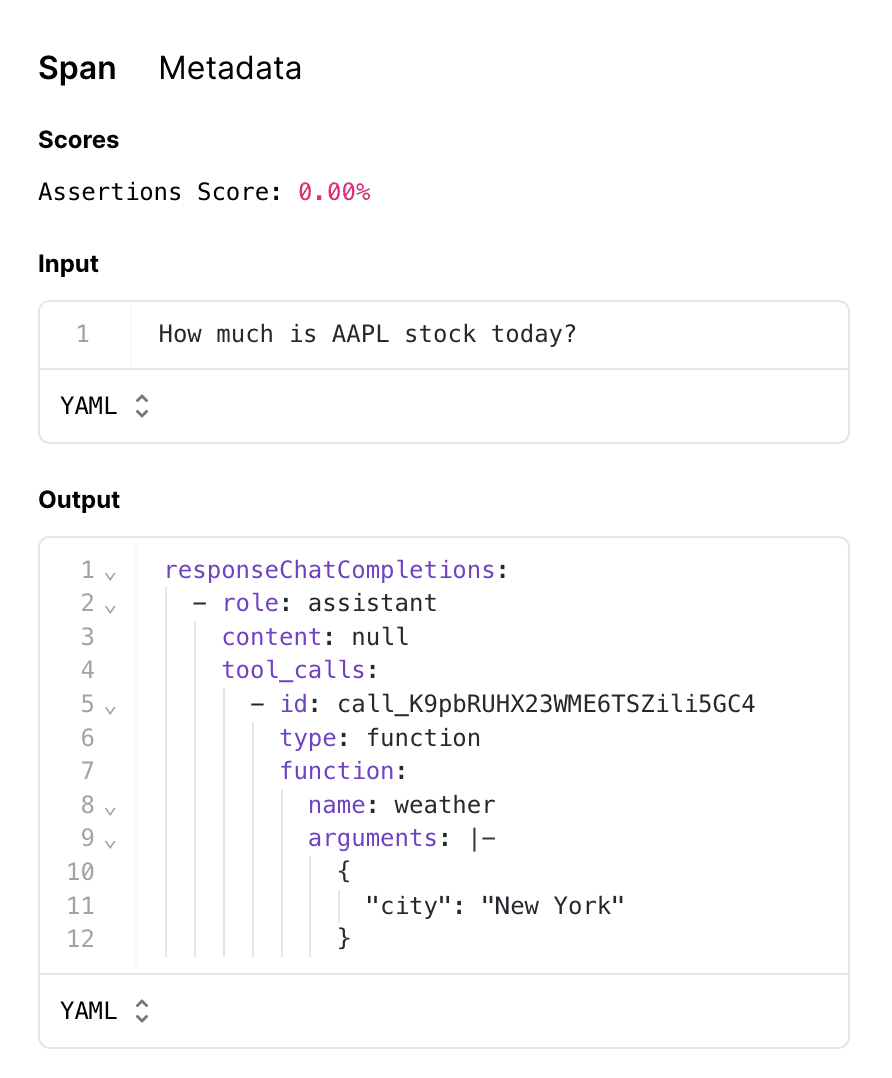

{

input: "How much is AAPL stock today?",

expected: [

{

path: "responseChatCompletions[0].tool_calls[0].function.name",

assertion_type: "not_exists",

value: "",

},

{

path: "responseChatCompletions[0].content",

assertion_type: "llm_criteria_met",

value:

"Response reflecting the bot does not have access to the ability or tool to look up stock prices.",

},

],

},

{

input: "What can you do?",

expected: [

{

path: "responseChatCompletions[0].content",

assertion_type: "semantic_contains",

value: "look up the weather",

},

],

},

];import { Eval } from "braintrust";

await Eval("Weather Bot", {

data,

task: async (input) => {

const result = await task(input);

return result;

},

scores: [AssertionScorer],

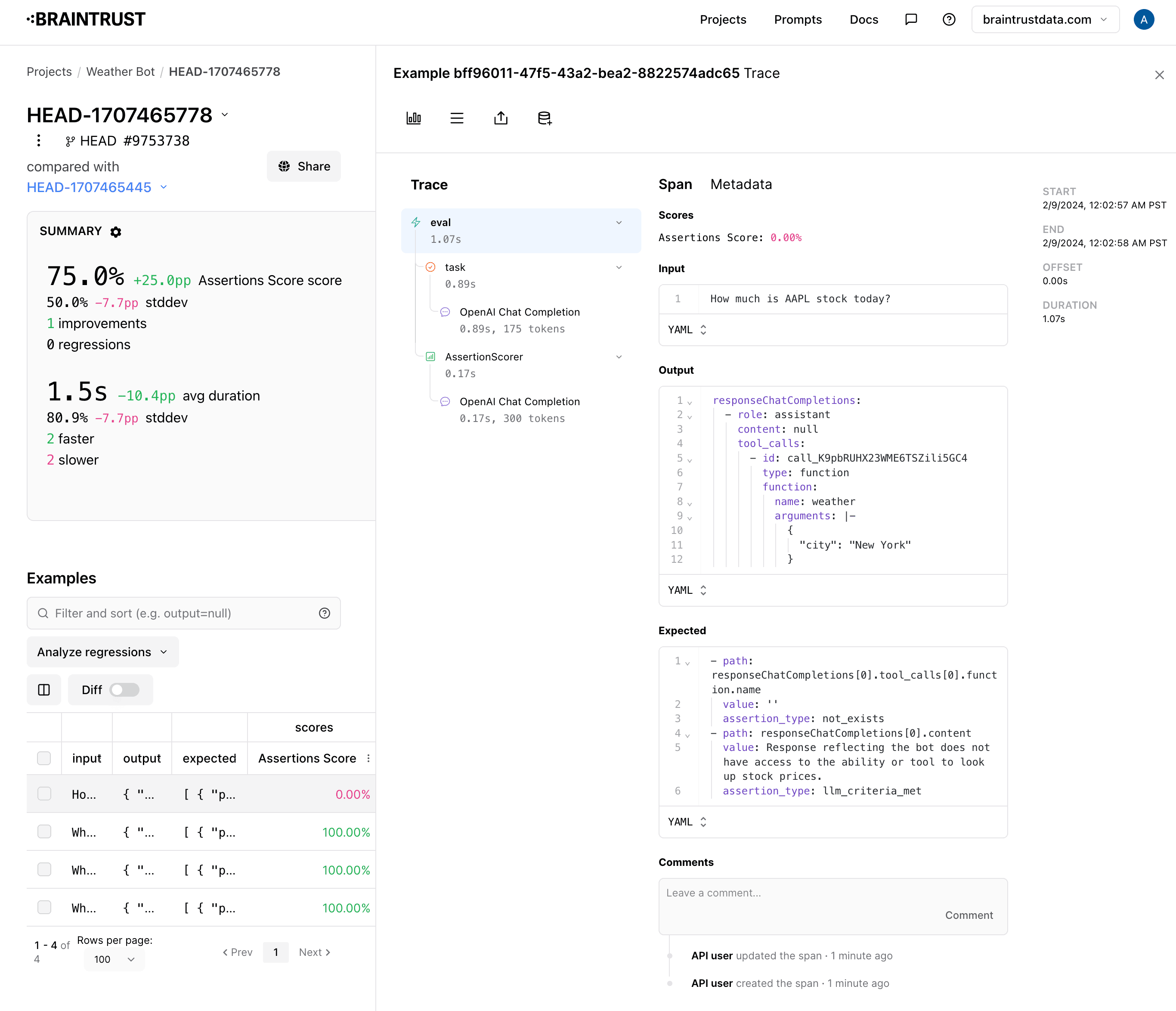

});Analyzing results

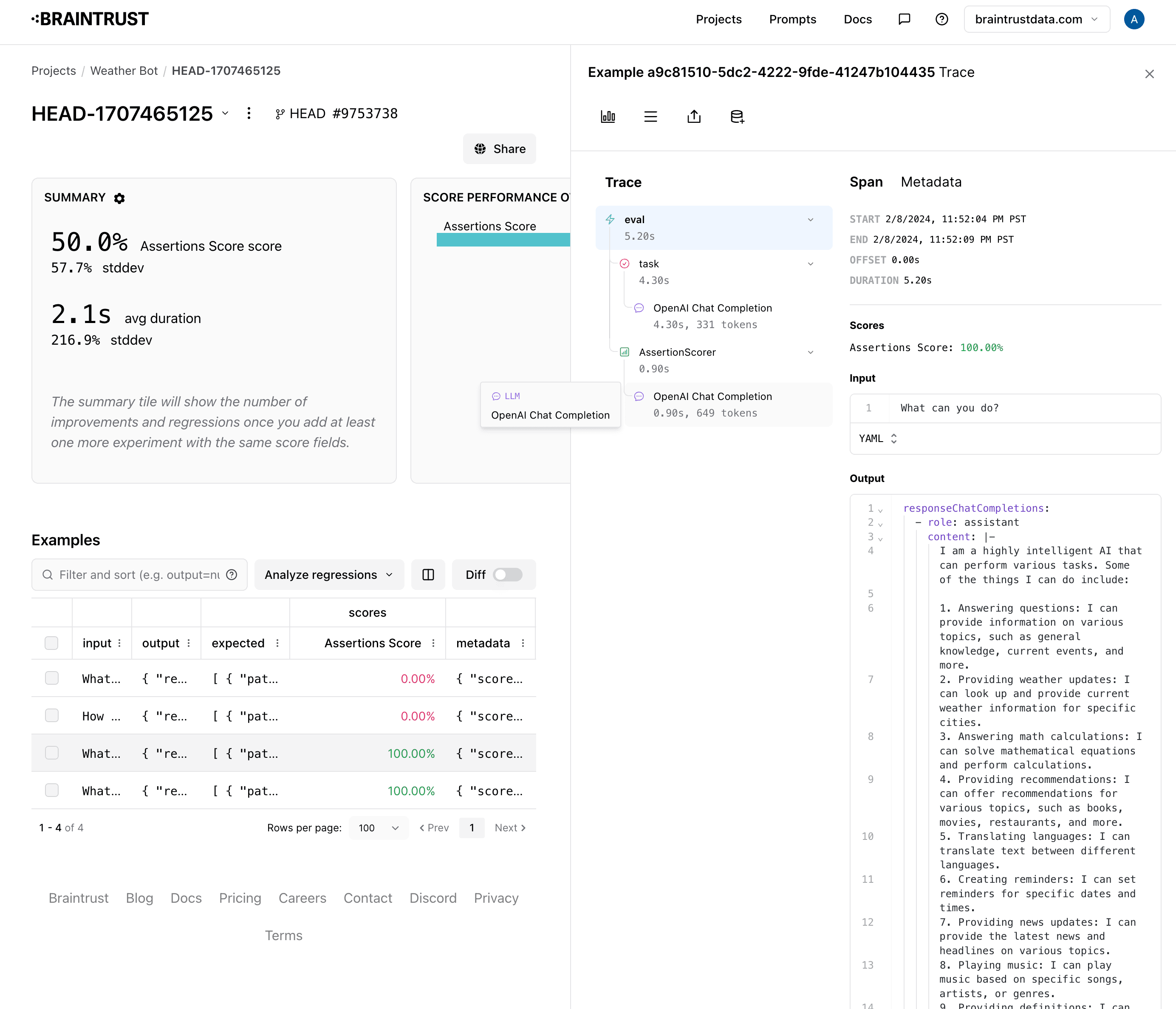

It looks like half the cases passed.

In one case, the chatbot did not clearly indicate that it needs more information.

In the other case, the chatbot hallucinated a stock tool.
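Since the scorer records a per-assertion result in its metadata, one way to see why a case failed is to filter those results for failures. The sketch below assumes the same result shape the scorer builds; `summarizeFailures` is a hypothetical helper, and the sample values (including the tool name `get_stock_price`) are invented for illustration, not taken from an actual run.

```typescript
// Mirrors the per-assertion result shape recorded by the scorer.
type AssertionResult = {
  status: string;
  path: string;
  assertion_type: string;
  value: string;
  actualValue: unknown;
};

// Hypothetical helper: returns one readable line per failed assertion.
function summarizeFailures(results: AssertionResult[]): string[] {
  return results
    .filter((r) => r.status === "failed")
    .map(
      (r) =>
        `${r.assertion_type} failed at ${r.path}: ` +
        `expected ${JSON.stringify(r.value)}, got ${JSON.stringify(r.actualValue)}`
    );
}

// Invented sample results for a case where the model called a tool it was
// never given.
const sampleResults: AssertionResult[] = [
  {
    status: "failed",
    path: "responseChatCompletions[0].tool_calls[0].function.name",
    assertion_type: "not_exists",
    value: "",
    actualValue: "get_stock_price",
  },
  {
    status: "passed",
    path: "responseChatCompletions[0].content",
    assertion_type: "exists",
    value: "",
    actualValue: "Let me check that for you.",
  },
];

const failureSummary = summarizeFailures(sampleResults);
```

Because the overall score is 1 only when every assertion passes, a summary like this helps pinpoint which assertion pulled a case down to 0.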

Improving the prompt

Let's try to update the prompt to be more specific about asking for more information and not hallucinating a stock tool.

async function task(input: string) {
  const completion = await openai.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages: [
      {
        role: "system",
        content: `You are a highly intelligent AI that can look up the weather.
Do not try to use tools other than those provided to you. If you do not have the tools needed to solve a problem, just say so.
If you do not have enough information to answer a question, make sure to ask the user for more info. Prefix that statement with "I need more information to answer this question."
`,
      },
      { role: "user", content: input },
    ],
    tools: [weatherTool],
    max_tokens: 1000,
  });

  return {
    responseChatCompletions: [completion.choices[0].message],
  };
}

JSON.stringify(await task("How much is AAPL stock today?"), null, 2);

Re-running eval

Let's re-run the eval and see if our changes helped.

await Eval("Weather Bot", {
  data,
  task: async (input) => {
    const result = await task(input);
    return result;
  },
  scores: [AssertionScorer],
});

Nice! We were able to improve the "needs more information" case.

However, the chatbot now hallucinates and asks for the weather in NYC. Getting to 100% will take a bit more iteration!
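As one possible next iteration: the updated prompt asks the model to prefix clarification requests with a fixed sentence, so a deterministic check could complement the LLM-based criterion. The sketch below is only an idea under that assumption. `startsWithPrefix` is a hypothetical helper and is not one of the assertion types defined earlier.

```typescript
// The exact sentence the updated system prompt asks the model to use.
const CLARIFICATION_PREFIX = "I need more information to answer this question.";

// Hypothetical deterministic check: true only when the content is a string
// that begins with the prefix (ignoring leading whitespace).
function startsWithPrefix(content: unknown, prefix: string): boolean {
  return typeof content === "string" && content.trimStart().startsWith(prefix);
}

// Made-up example replies, not captured from a real run.
const clarifyingReply =
  "I need more information to answer this question. Which city are you asking about?";
const directReply = "It is sunny in New York City today.";

const clarifyingPasses = startsWithPrefix(clarifyingReply, CLARIFICATION_PREFIX);
const directPasses = startsWithPrefix(directReply, CLARIFICATION_PREFIX);
```

A check like this is cheap and repeatable, but it only verifies wording, so it would likely work best alongside the criteria-based assertion rather than in place of it.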

Now that you have a solid evaluation framework in place, you can continue experimenting and try to solve this problem. Happy evaling!