Human review

Although Braintrust helps you automatically evaluate AI software, human review is a critical part of the process. Braintrust integrates human feedback from end users, subject matter experts, and product teams in one place. You can use human review to evaluate and compare experiments, assess the efficacy of your automated scoring methods, and curate log events to use in your evals.

Configuring human review

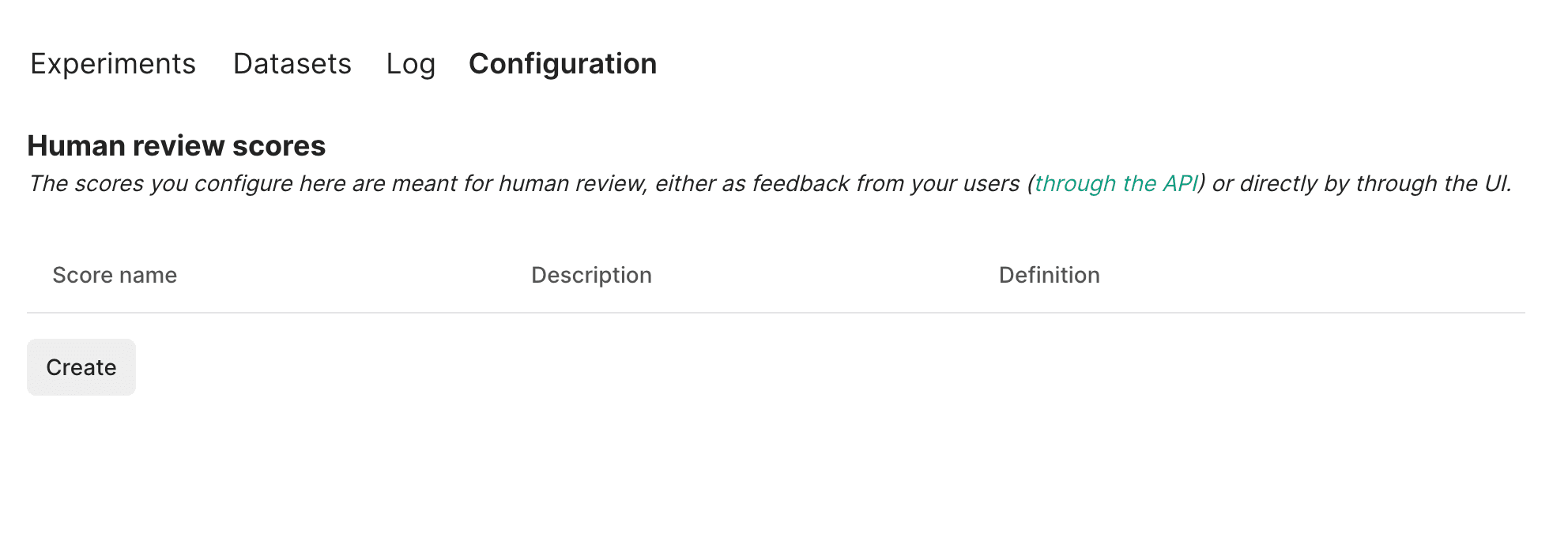

To set up human review, define the scores you want to collect in the project's "Configuration" tab.

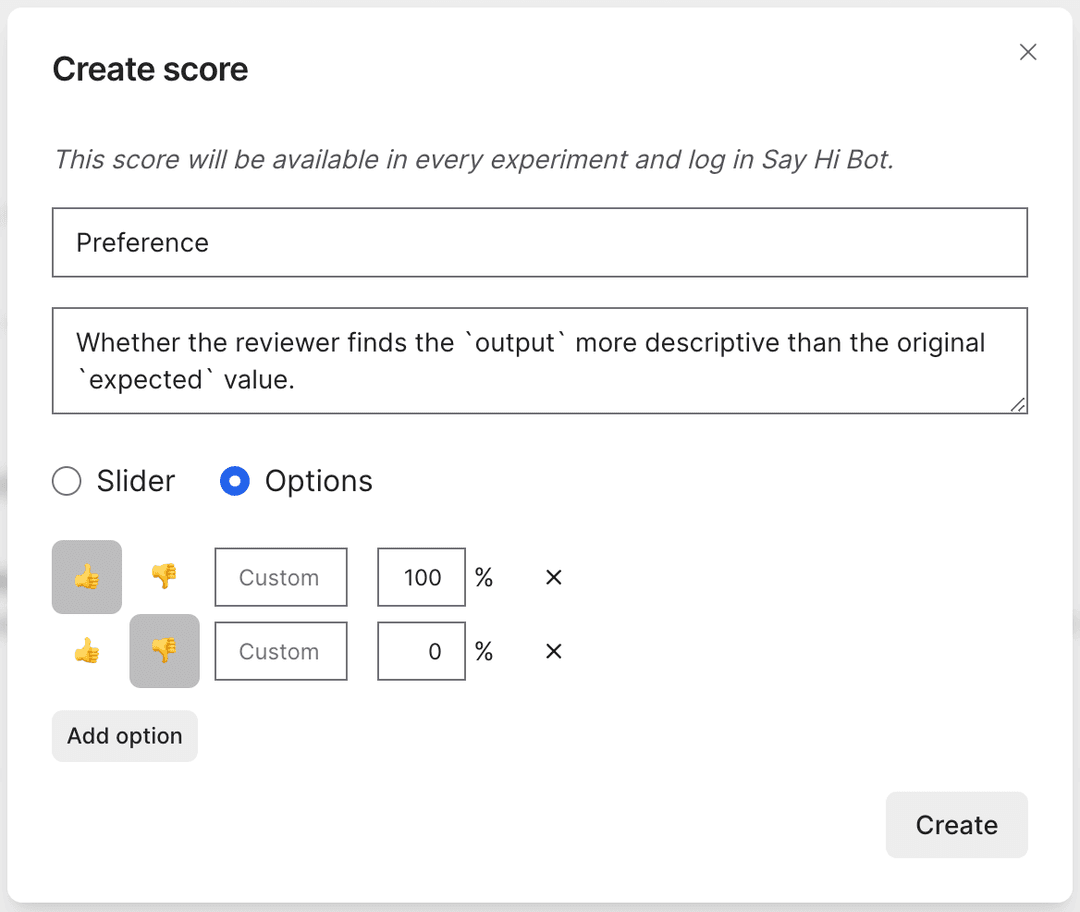

Click "Create" to configure a new score. A score can either be a continuous value between 0 and 1, with a slider input control, or a categorical value where you can define the possible options and their scores.
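To make the two score types concrete, here is a minimal sketch in Python. It is not Braintrust's configuration format or API: the `ContinuousScore` and `CategoricalScore` classes, the `to_value` method, and the "Tone" options are all hypothetical names chosen for illustration. It only shows the idea that a slider accepts any number between 0 and 1, while a categorical score maps each option you define to a number.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ContinuousScore:
    """Hypothetical model of a slider score: any value in [0, 1]."""
    name: str

    def to_value(self, raw: float) -> float:
        # Reject anything the slider could not produce.
        if not 0.0 <= raw <= 1.0:
            raise ValueError(f"{self.name}: {raw} is outside [0, 1]")
        return raw


@dataclass
class CategoricalScore:
    """Hypothetical model of a categorical score: each option maps to a number."""
    name: str
    options: Dict[str, float] = field(default_factory=dict)

    def to_value(self, raw: str) -> float:
        # Only options defined up front are valid choices.
        if raw not in self.options:
            raise ValueError(f"{self.name}: unknown option {raw!r}")
        return self.options[raw]


# Example definitions (names and option values are illustrative only).
greatness = ContinuousScore(name="Greatness")
tone = CategoricalScore(
    name="Tone",
    options={"Rude": 0.0, "Neutral": 0.5, "Friendly": 1.0},
)

print(greatness.to_value(0.8))
print(tone.to_value("Friendly"))
```

Either way, a reviewer's choice ends up as a number, which is what lets human scores sit next to automated scores in the same summary.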

Once you create a score, it will automatically appear in the "Scores" section in each experiment and log event throughout the project.

Reviewing experiments

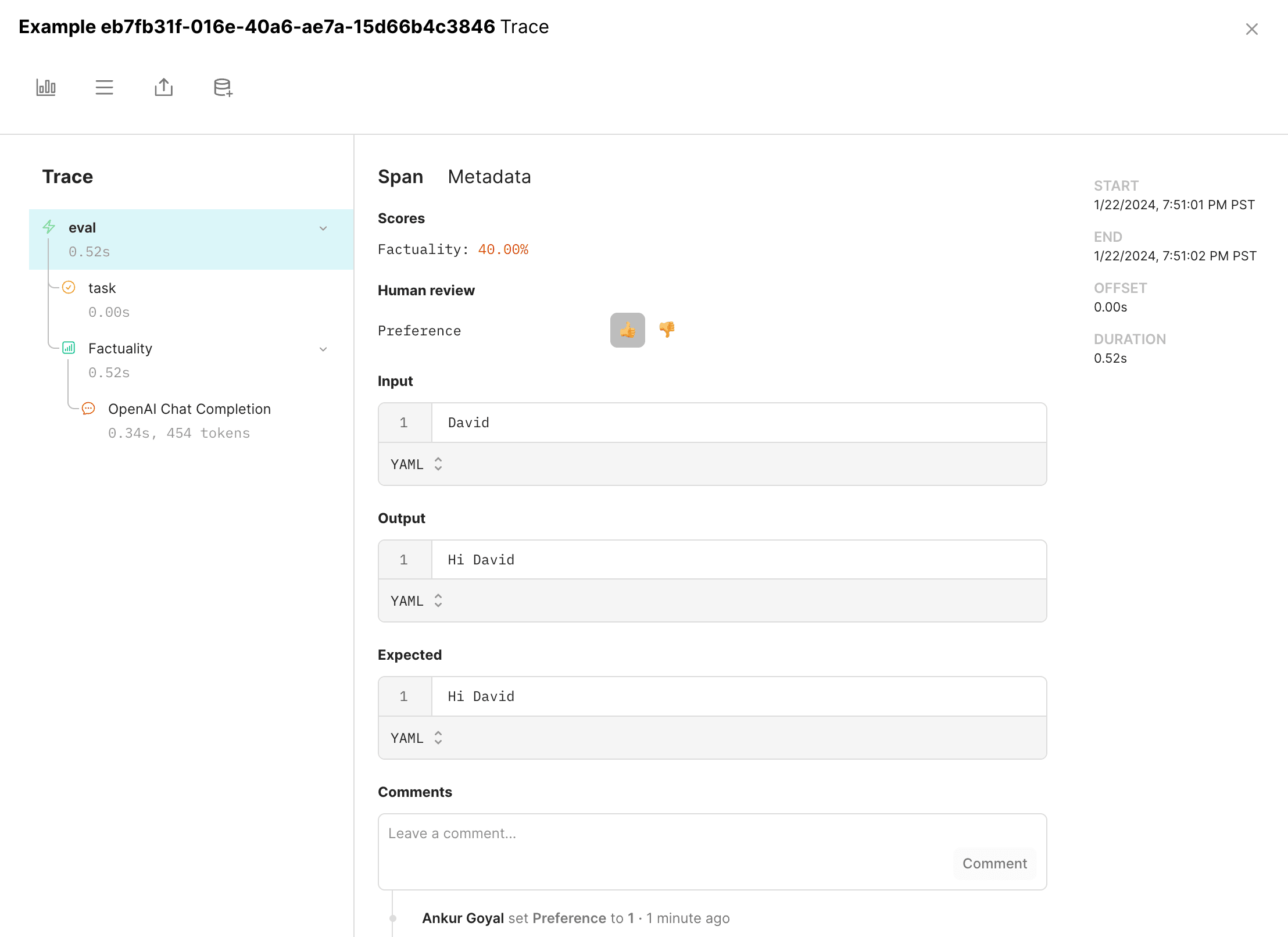

To manually review results in an experiment, click an example in the Experiment view. The expanded trace view shows the manual scores you defined.

As you set scores, they will be automatically saved and reflected in the experiment summary.

Reviewing log events

The same interaction works for logs.

You can filter on log events with specific scores by typing a filter like `scores.Greatness > 0.75` in the filter bar, and then add the matching log events to a dataset to use in your evals. This is a powerful way to utilize human feedback to improve your evals.
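The filter-then-curate flow above happens in the UI, but the logic can be sketched in plain Python. This is an illustration only, not Braintrust's query engine or SDK: the event shape (`input`, `output`, `expected`, `scores`) and the helper names `filter_by_score` and `to_dataset_rows` are assumptions. Falling back to the logged output when no expected value has been set is a choice made for this sketch.

```python
def filter_by_score(events, score_name, threshold):
    """Return events whose named score is strictly greater than threshold."""
    matches = []
    for event in events:
        value = (event.get("scores") or {}).get(score_name)
        # Skip events that have not been reviewed for this score yet.
        if value is not None and value > threshold:
            matches.append(event)
    return matches


def to_dataset_rows(events):
    """Keep only what a dataset row needs: the input and an expected value."""
    return [
        {"input": e["input"], "expected": e.get("expected", e.get("output"))}
        for e in events
    ]


# Hypothetical log events; the third one has no human score yet.
log_events = [
    {"input": "What is 2 + 2?", "output": "4", "scores": {"Greatness": 0.9}},
    {"input": "Capital of France?", "output": "Lyon", "scores": {"Greatness": 0.2}},
    {"input": "Say hi", "output": "Hi!", "scores": {}},
]

# Mirrors the filter `scores.Greatness > 0.75`.
rows = to_dataset_rows(filter_by_score(log_events, "Greatness", 0.75))
print(rows)
```

Only the first event passes the filter, so the resulting dataset contains a single reviewed, high-scoring example.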

Commenting and correcting values

In addition to setting scores, you can also add comments to spans and update their expected values. These updates are tracked alongside score updates to form an audit trail of edits to a span.
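One way to picture such an audit trail is as an append-only list of edits kept next to the span's current state. The sketch below is a hypothetical data structure, not Braintrust's storage model: the `Span` class, its method names, and the fields recorded per entry (`author`, `kind`, `old`, `new`) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Span:
    """Hypothetical span holding current review state plus an edit history."""
    scores: Dict[str, float] = field(default_factory=dict)
    expected: Any = None
    comments: List[str] = field(default_factory=list)
    audit_trail: List[Dict[str, Any]] = field(default_factory=list)

    def _record(self, author: str, kind: str, detail: Dict[str, Any]) -> None:
        # Every edit appends an entry; nothing is ever overwritten here.
        self.audit_trail.append({"author": author, "kind": kind, **detail})

    def set_score(self, author: str, name: str, value: float) -> None:
        self._record(author, "score",
                     {"name": name, "old": self.scores.get(name), "new": value})
        self.scores[name] = value

    def add_comment(self, author: str, text: str) -> None:
        self._record(author, "comment", {"text": text})
        self.comments.append(text)

    def set_expected(self, author: str, value: Any) -> None:
        self._record(author, "expected", {"old": self.expected, "new": value})
        self.expected = value


# Two hypothetical reviewers edit the same span.
span = Span()
span.set_score("alice", "Greatness", 0.4)
span.add_comment("alice", "Answer names the wrong city.")
span.set_expected("bob", "Paris")
span.set_score("bob", "Greatness", 0.1)

for entry in span.audit_trail:
    print(entry)
```

The span's fields always hold the latest values, while the trail preserves who changed what and in which order, including the score that was later overwritten.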

Capturing end-user feedback

The same set of updates (scores, comments, and expected values) can be captured from end users as well. See the User feedback guide for more details.